Memory-efficient Transformer-based network model for Traveling Salesman Problem

Hua Yang, Minghao Zhao, Lei Yuan, Yang Yu, Zhenhua Li, and Ming Gu. "Memory-efficient Transformer-based network model for Traveling Salesman Problem." Neural Networks 161 (2023): 589-597.

The computational complexity is quadratic, and the memory requirement is too high, which leads to out-of-memory failures.

The variables of the Markov decision process in reinforcement learning are: state,

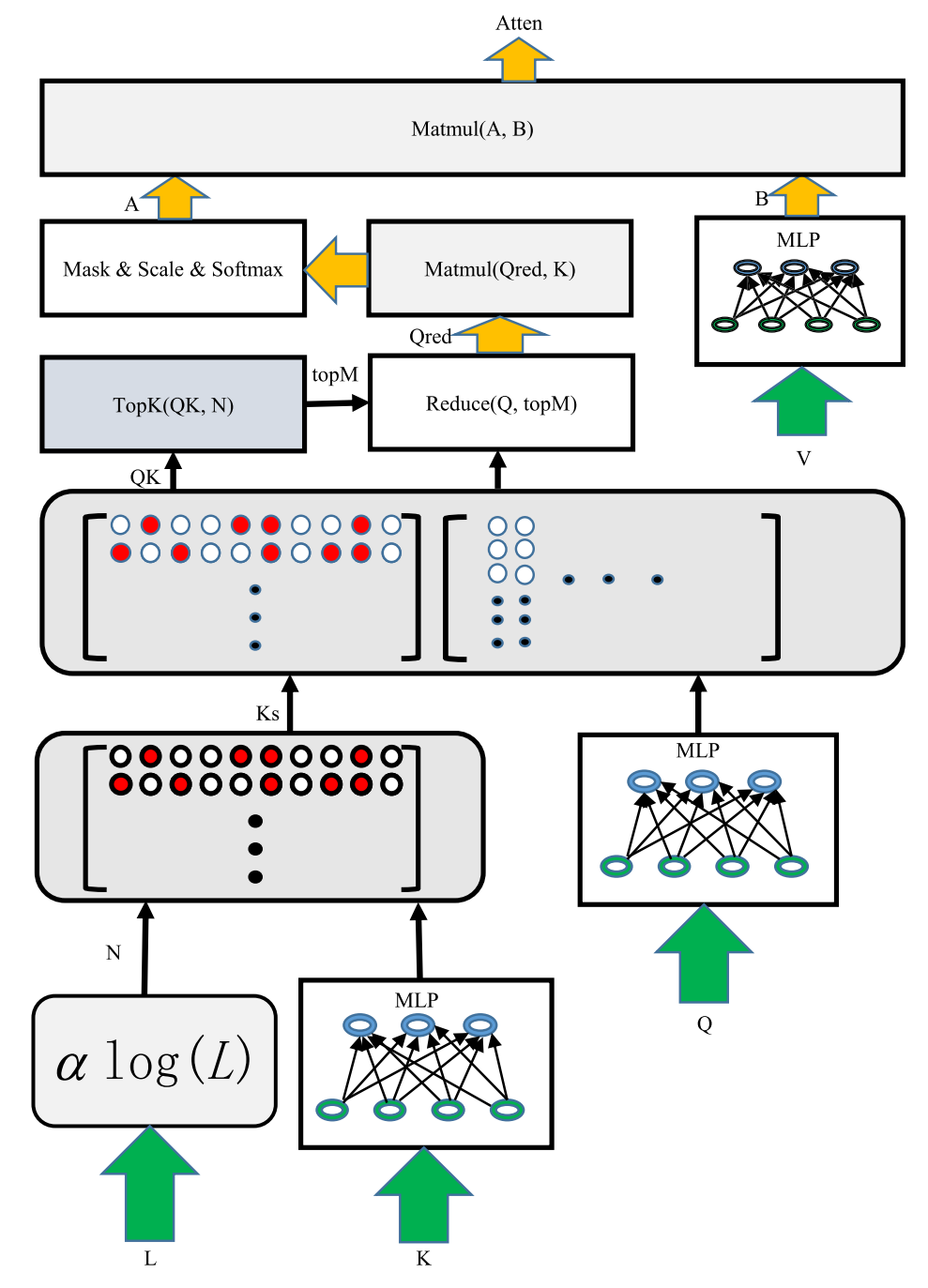

Tspformer architecture

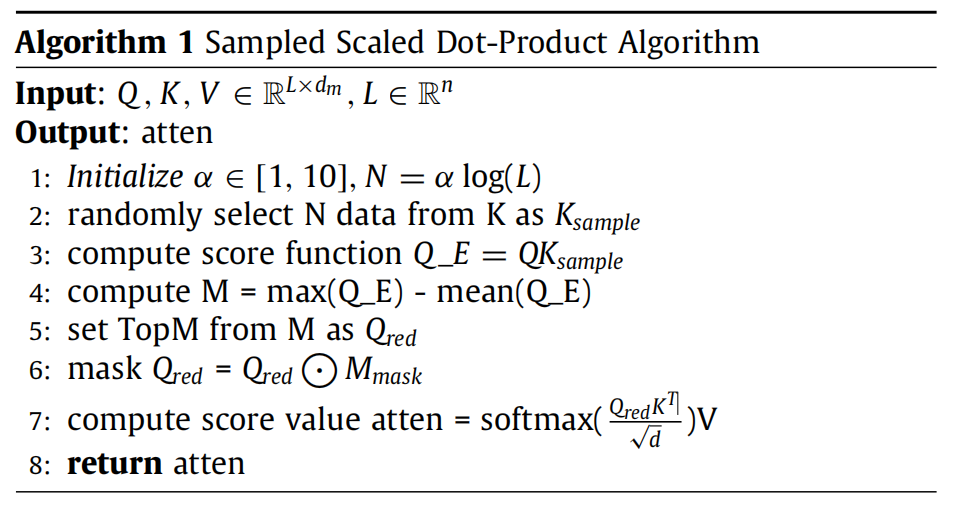

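Most of the attention comes from a small number of dominant dot-product values, so the queries can be sampled, which reduces the amount of data that enters the matrix multiplication.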

Sampling

Use matrix multiplication to compute

Compute again
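The sampling idea in the notes above can be illustrated with a small NumPy sketch. It is not the paper's exact procedure, since the formulas are missing from these notes. It assumes a ProbSparse-style scheme: score every query against a random subset of keys, keep the queries with the most "peaked" scores, compute full attention only for them, and give the remaining queries the mean of the values. The function name `sampled_attention`, its parameters, and the max-minus-mean score are all assumptions made for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def sampled_attention(Q, K, V, n_sampled_keys, n_top_queries, rng):
    """Hypothetical sketch of query-sampled scaled dot-product attention.

    Q: (n, d), K: (m, d), V: (m, d_v). Returns (output, selected query indices).
    """
    n, d = Q.shape

    # Step 1 (sampling): score each query against a random subset of keys only.
    key_idx = rng.choice(K.shape[0], size=n_sampled_keys, replace=False)
    partial = Q @ K[key_idx].T / np.sqrt(d)          # (n, n_sampled_keys)

    # Assumed importance score: max minus mean of the sampled dot products.
    score = partial.max(axis=1) - partial.mean(axis=1)

    # Step 2: keep the queries with the largest scores.
    top = np.argsort(score)[-n_top_queries:]

    # Step 3: queries that were not selected fall back to the mean of V.
    out = np.tile(V.mean(axis=0), (n, 1))

    # Step 4 (compute again): full attention, but only for the selected queries.
    weights = softmax(Q[top] @ K.T / np.sqrt(d), axis=-1)   # (n_top_queries, m)
    out[top] = weights @ V
    return out, top
```

With `n_top_queries` equal to the number of queries, the function reduces to ordinary scaled dot-product attention. With a smaller value, the large score matrix has only `n_top_queries` rows instead of `n`, which is where the memory saving comes from.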

Encoder

Decoder

Model training with reinforcement learning

Loss function:

In order to construct

Learning to Iteratively Solve Routing Problems with Dual-Aspect Collaborative Transformer

Yining Ma, Jingwen Li, Zhiguang Cao, Wen Song, Le Zhang, Zhenghua Chen, and Jing Tang. "Learning to Iteratively Solve Routing Problems with Dual-Aspect Collaborative Transformer." Conference on Neural Information Processing Systems (2021): 11096-11107. arXiv:2110.02544.